How to Create a Print Server Cluster in Windows Server 2016

Physical Hardware Requirements

Up to 23 instances of SQL Server require the following resources:

- Use basic disks, not dynamic disks.

- Format partitions with NTFS.

- Use either master boot record (MBR) or GUID partition table (GPT).

- For storage volumes larger than 2 terabytes, use GUID partition table (GPT).

- For storage volumes smaller than 2 terabytes, you can use master boot record (MBR).

- If each SQL Server instance uses 4 disks, 23 instances on a cluster disk array would require 92 disks.

- Cluster storage must not be Windows Distributed File System (DFS).
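The disk arithmetic and the 2 TB partition-style rule above can be checked with a few lines of Python. This is a minimal sketch: the helper names are hypothetical, the four-disks-per-instance figure is the example from the list, and the 2 TB limit assumes MBR disks with 512-byte sectors.

```python
# Minimal sketch of the sizing rules above; helper names are hypothetical.
TB = 1024 ** 4            # one (binary) terabyte in bytes
# MBR with 512-byte sectors addresses at most 2**32 sectors, i.e. 2 TB.
MBR_LIMIT_BYTES = 2 * TB

DISKS_PER_INSTANCE = 4    # assumption taken from the example in the list


def partition_style(volume_bytes: int) -> str:
    """Return the partition style suggested above for a volume of this size."""
    return "GPT" if volume_bytes > MBR_LIMIT_BYTES else "MBR"


def cluster_disks_needed(instances: int, disks_per_instance: int = DISKS_PER_INSTANCE) -> int:
    """Total cluster disks when every SQL Server instance gets its own disks."""
    if instances < 1:
        raise ValueError("need at least one instance")
    return instances * disks_per_instance


if __name__ == "__main__":
    print(cluster_disks_needed(23))
    print(partition_style(3 * TB))
    print(partition_style(500 * 1024 ** 3))
```

Keeping the per-instance disk count as a parameter makes it easy to re-run the estimate if your instances need more or fewer disks than the example assumes.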

Software Requirements

Download the SQL Server 2012 installation media and review the SQL Server 2012 Release Notes. Install the following prerequisite software on each failover cluster node, and then restart each node once before running Setup.

- Windows PowerShell 2.0

- .NET Framework 3.5 SP1

- .NET Framework 4

Active Directory Requirements

- Cluster nodes must be members of the same Active Directory Domain Services (AD DS) domain.

- The servers in the cluster must use Domain Name System (DNS) for name resolution.

- Use a consistent naming convention for cluster nodes, for example DC1PPSQLNODE01 for a production physical node or DC2PVSQLNODE02 for a production virtual node.

- Do not include any of these characters in the cluster name: <, >, ", ', &.

- Never install SQL Server on a domain controller.

- Never install cluster services on a domain controller or on a Forefront TMG 2010 server.
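A naming convention is easier to enforce when it is checked mechanically. The sketch below is hypothetical: the regular expression is only one possible reading of the two example names above (site, environment, physical or virtual, role, sequence number), so adapt it to your own convention. The 15-character cap reflects the NetBIOS computer-name limit.

```python
import re

# Characters the guidance above says must not appear in a cluster name.
FORBIDDEN_CHARS = set('<>"\'&')
NETBIOS_MAX_LEN = 15  # NetBIOS computer names are limited to 15 characters

# Hypothetical reading of DC1PPSQLNODE01 / DC2PVSQLNODE02:
# DC<site digit> + P (production) + P|V (physical|virtual) + SQLNODE + 2 digits.
NAME_PATTERN = re.compile(r"^DC\dP[PV]SQLNODE\d{2}$")


def name_problems(name: str) -> list:
    """Return the reasons a name breaks the rules; an empty list means OK."""
    problems = []
    bad = sorted(FORBIDDEN_CHARS.intersection(name))
    if bad:
        problems.append("forbidden characters: " + " ".join(bad))
    if len(name) > NETBIOS_MAX_LEN:
        problems.append(f"longer than {NETBIOS_MAX_LEN} characters")
    if not NAME_PATTERN.match(name.upper()):
        problems.append("does not follow the naming convention")
    return problems


for candidate in ("DC1PPSQLNODE01", "DC2PVSQLNODE02", "SQL&CLUSTER"):
    print(candidate, name_problems(candidate) or "OK")
```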

Unsupported Configurations

The following configurations are unsupported:

Permission Requirements

The system administrator or project engineer who creates the cluster must be a member of at least the Domain Users security group, must have permission to create computer objects in Active Directory, and must be a member of the local Administrators group on each clustered server.

Network Settings and IP Address Requirements

You need at least two network cards in each cluster node: one for domain or client connectivity and another for the heartbeat network.

The following requirements are specific to a Microsoft cluster:

Use identical network settings on each node, such as speed, duplex mode, flow control, and media type.

Ensure that each of these private networks uses a unique subnet.

Ensure that the heartbeat network adapters on all nodes use IP addresses from the same range.

Ensure that each network uses its own unique subnet, whether the nodes are placed in a single geographic location or in diverse locations.

Configure the domain network with an IP address, subnet mask, default gateway, and DNS server addresses.

Configure the heartbeat network with only an IP address and subnet mask.
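Before you assign addresses, you can sanity-check the plan with Python's standard `ipaddress` module. This sketch assumes a single-site, two-node cluster and uses made-up addresses; it checks that each node's domain and heartbeat adapters are on different subnets and that all heartbeat adapters share one subnet.

```python
import ipaddress

# Hypothetical two-node addressing plan; replace with your own values.
nodes = {
    "node1": {"domain": "10.10.1.11/24", "heartbeat": "192.168.100.1/24"},
    "node2": {"domain": "10.10.1.12/24", "heartbeat": "192.168.100.2/24"},
}


def check_plan(plan: dict) -> list:
    """Return a list of problems found in the addressing plan."""
    errors = []
    heartbeat_nets = set()
    for node, nics in plan.items():
        domain = ipaddress.ip_interface(nics["domain"])
        heartbeat = ipaddress.ip_interface(nics["heartbeat"])
        # A node's domain and heartbeat adapters must be on different subnets.
        if domain.network.overlaps(heartbeat.network):
            errors.append(f"{node}: domain and heartbeat subnets overlap")
        heartbeat_nets.add(heartbeat.network)
    # All heartbeat adapters should share one subnet (the same IP range).
    if len(heartbeat_nets) > 1:
        nets = ", ".join(str(n) for n in sorted(heartbeat_nets))
        errors.append("heartbeat adapters are on different subnets: " + nets)
    return errors


print(check_plan(nodes) or "addressing plan looks consistent")
```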

Additional Requirements

Verify that antivirus software is not installed on your WSFC cluster.

Ensure that all cluster nodes are configured identically, including COM+, disk drive letters, and users in the administrators group.

Clear the system logs on all nodes, and then view the system logs again.

Ensure that the logs are free of any error messages before continuing.

Before you install or update a SQL Server failover cluster, disable all applications and services that might use SQL Server components during installation, but leave the disk resources online.

SQL Server Setup automatically sets dependencies between the SQL Server cluster group and the disks that will be in the failover cluster. Do not set dependencies for disks before Setup.

If you use an SMB file share as a storage option, the SQL Server Setup account must have SeSecurityPrivilege on the file server. To grant it, open the Local Security Policy console on the file server and add the SQL Server Setup account to the Manage auditing and security log user right.

Supported Operating Systems

Windows Server 2012 64-bit x64 Datacenter

Windows Server 2012 64-bit x64 Standard

Windows Server 2008 R2 SP1 64-bit x64 Datacenter

Windows Server 2008 R2 SP1 64-bit x64 Enterprise

Windows Server 2008 R2 SP1 64-bit x64 Standard

Windows Server 2008 R2 SP1 64-bit x64 Web

Understanding Quorum configuration

In simple terms, quorum is the voting mechanism of a Microsoft cluster. Each node has one vote. In an MSCS cluster, this mechanism continuously tracks how many nodes are online and how many nodes are required for the cluster to keep running. Each node holds a copy of the cluster configuration, and a disk witness, if configured, also holds a copy. For MSCS, you choose one of four quorum configurations:

Node Majority: Recommended for clusters with an odd number of nodes.

Node and Disk Majority: Recommended for clusters with an even number of nodes. With N nodes, the cluster can sustain N/2 node failures if the disk witness remains online, or (N/2) - 1 node failures if the disk witness goes offline.

Node and File Share Majority: For clusters with special configurations. Works in a similar way to Node and Disk Majority, but instead of a disk witness, this cluster uses a file share witness.

No Majority: Disk Only (not recommended).
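The failure counts above follow from simple vote arithmetic: the cluster runs while the surviving votes form a strict majority. The following Python sketch models that static vote counting (one vote per node, one for a disk or file share witness). It ignores the dynamic quorum feature introduced in Windows Server 2012 and the Disk Only mode, and the function name is hypothetical.

```python
def tolerated_failures(nodes: int, witness: bool = False, witness_online: bool = True) -> int:
    """How many node failures a majority-based quorum can survive.

    Each node has one vote; a disk or file share witness adds one more.
    The cluster keeps running while surviving votes are a strict majority.
    """
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    total_votes = nodes + (1 if witness else 0)
    majority = total_votes // 2 + 1
    # The witness only helps if it is configured and still reachable.
    witness_votes = 1 if (witness and witness_online) else 0
    nodes_needed = majority - witness_votes
    return max(nodes - nodes_needed, 0)


for n in (3, 4, 5):
    print(n, "nodes, Node Majority:", tolerated_failures(n))
print("4 nodes + witness online: ", tolerated_failures(4, witness=True))
print("4 nodes + witness offline:", tolerated_failures(4, witness=True, witness_online=False))
```

The even-node case shows why a witness is recommended there: four nodes alone survive only one failure, while four nodes plus an online witness survive two.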

Why is quorum necessary? Network problems can interrupt communication between cluster nodes. If the nodes split into groups that cannot see each other, each group could try to run the same clustered services and write to the same storage, which can corrupt data. To prevent the issues caused by such a split, the cluster software requires that any set of nodes running as a cluster use a voting algorithm to determine whether, at a given time, that set has quorum. Because a given cluster has a specific set of nodes and a specific quorum configuration, the cluster knows how many "votes" constitute a majority (that is, a quorum). If the number of votes drops below the majority, the cluster stops running. The nodes still listen for the presence of other nodes, in case another node reappears on the network, but they do not begin to function as a cluster until quorum exists again.
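The majority rule is what prevents a split: two disjoint groups of nodes can never both hold more than half of the votes. A minimal sketch, assuming a hypothetical four-node, two-site cluster with a file share witness that only site A can still reach:

```python
def partition_has_quorum(partition_votes: int, total_votes: int) -> bool:
    """True if this group of nodes holds a strict majority of all votes."""
    return 2 * partition_votes > total_votes


# Hypothetical layout: four nodes plus a file share witness = 5 votes.
TOTAL_VOTES = 5
site_a = 2 + 1  # two nodes that can still reach the witness
site_b = 2      # two nodes cut off from site A and from the witness

print("site A keeps running:", partition_has_quorum(site_a, TOTAL_VOTES))
print("site B keeps running:", partition_has_quorum(site_b, TOTAL_VOTES))

# However the votes are divided, two disjoint partitions never both win.
for a in range(TOTAL_VOTES + 1):
    b = TOTAL_VOTES - a
    assert not (partition_has_quorum(a, TOTAL_VOTES) and partition_has_quorum(b, TOTAL_VOTES))
```

A tie (for example 2 of 4 votes) does not count as quorum, which is why an odd total number of votes is preferred.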

Understanding a multi-site cluster environment

Hardware: A multi-site cluster requires redundant hardware with adequate capacity, storage functionality, replication between sites, and suitable network characteristics such as low network latency.

Number of nodes and corresponding quorum configuration: For a multi-site cluster, Microsoft recommends an even number of nodes and, for the quorum configuration, the Node and File Share Majority option, that is, including a file share witness as part of the configuration. The file share witness can be located at a third site, different from the main and secondary sites, so that it is not lost if one of the other two sites has problems.

Network configuration (deciding between multiple subnets and a VLAN): Configuring a multi-site cluster with different subnets is supported. However, when you use multiple subnets, consider how clients will discover services or applications that have just failed over: the DNS servers must update one another with the new IP address before clients can discover the service or application that has failed over. If you use VLANs in a multi-site cluster, you must reduce the Time to Live (TTL) of DNS discovery.
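When the clustered IP address changes on failover, as it does across subnets, the delay a client sees depends on both DNS replication and the TTL of the record it has cached. The sketch below is a deliberately simplified model with hypothetical numbers; on the cluster itself, the TTL registered for a Network Name resource is controlled by its HostRecordTTL private property.

```python
def worst_case_discovery_seconds(dns_replication_s: float, record_ttl_s: float) -> float:
    """Rough upper bound on how long a client may keep using the old IP address.

    Simplified model: a client may query just before the new address has
    replicated to its DNS server, then cache the stale answer for a full TTL.
    Intermediate resolver caches are ignored.
    """
    if dns_replication_s < 0 or record_ttl_s < 0:
        raise ValueError("times must be non-negative")
    return dns_replication_s + record_ttl_s


# Hypothetical numbers: 15 minutes of inter-site DNS replication.
for ttl in (1200, 300):
    minutes = worst_case_discovery_seconds(900, ttl) / 60
    print(f"TTL {ttl:>4} s: clients may wait up to {minutes:.0f} minutes")
```

Lowering the TTL shortens only the caching part of the delay; DNS replication between sites still has to complete.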

Tuning of heartbeat settings: The heartbeat settings include the frequency at which the nodes send heartbeat signals to each other to indicate that they are still functioning, and the number of heartbeats that a node can miss before another node initiates failover and begins taking over the services and applications that had been running on the failed node. In a multi-site cluster, you might want to tune these settings to account for differences in network latency caused by communication across subnets.
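Heartbeat tuning comes down to two numbers per network type: how often heartbeats are sent and how many can be missed. Failover Clustering exposes them as the cluster properties SameSubnetDelay, SameSubnetThreshold, CrossSubnetDelay, and CrossSubnetThreshold. The Python sketch below only does the arithmetic; the values in it are hypothetical examples, not recommended settings.

```python
def detection_time_ms(delay_ms: int, threshold: int) -> int:
    """Approximate silence before a node is declared down: interval x missed beats."""
    if delay_ms <= 0 or threshold <= 0:
        raise ValueError("delay and threshold must be positive")
    return delay_ms * threshold


def tolerates_outage(delay_ms: int, threshold: int, outage_ms: int) -> bool:
    """True if a network hiccup of outage_ms is shorter than the detection window."""
    return outage_ms < detection_time_ms(delay_ms, threshold)


# Hypothetical values; compare them with your cluster's actual settings.
settings = {
    "same subnet": (1000, 5),    # 1 s heartbeats, 5 may be missed
    "cross subnet": (1000, 20),  # 1 s heartbeats, 20 may be missed across the WAN
}

for name, (delay, threshold) in settings.items():
    print(name, detection_time_ms(delay, threshold), "ms;",
          "survives an 8 s WAN hiccup:", tolerates_outage(delay, threshold, 8000))
```

A longer window avoids unnecessary failovers on a jittery WAN, at the cost of detecting a real node failure more slowly.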

Replication of data: Replication of data between sites is very important in a multi-site cluster, and different hardware vendors accomplish it in different ways, so choose the replication process carefully. Before you decide, consult your storage vendor, server hardware vendor, and software vendors; your replication design will vary with the vendor, for example NetApp or EMC. Review the following considerations:

Choosing replication level (block, file system, or application level): The replication process can function through the hardware (at the block level), through the operating system (at the file system level), or through certain applications such as Microsoft Exchange Server (which has a feature called Cluster Continuous Replication or CCR). Work with your hardware and software vendors to choose a replication process that fits the requirements of your organization.

Configuring replication to avoid data corruption: The replication process must be configured so that any interruptions to the process will not result in data corruption, but instead will always provide a set of data that matches the data from the main site as it existed at some moment in time. In other words, the replication must always preserve the order of I/O operations that occurred at the main site. This is crucial, because very few applications can recover if the data is corrupted during replication.
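The ordering requirement can be illustrated with a toy model: if the secondary applies writes strictly in the primary's sequence, any interruption leaves it with a state the primary actually had at some moment. This Python sketch is an illustration only, with hypothetical names and data; real storage arrays and replication products implement this in vendor-specific ways.

```python
from dataclasses import dataclass, field


def state_after(log, n):
    """Primary's contents after its first n writes (a point-in-time image)."""
    state = {}
    for _, key, value in log[:n]:
        state[key] = value
    return state


@dataclass
class OrderedReplica:
    """Secondary copy that applies writes strictly in the primary's order."""
    data: dict = field(default_factory=dict)
    next_seq: int = 0
    early: dict = field(default_factory=dict)  # seq -> (key, value) received ahead of a gap

    def receive(self, seq, key, value):
        self.early[seq] = (key, value)
        # Apply only the unbroken run starting at next_seq; anything after a gap waits.
        while self.next_seq in self.early:
            k, v = self.early.pop(self.next_seq)
            self.data[k] = v
            self.next_seq += 1


# Hypothetical primary write log: (sequence number, block, contents).
log = [(0, "blk1", "A"), (1, "blk2", "B"), (2, "blk1", "C"), (3, "blk3", "D")]

replica = OrderedReplica()
# Writes arrive out of order and the link drops before write 1 is delivered.
for seq, key, value in (log[0], log[2], log[3]):
    replica.receive(seq, key, value)

# The secondary matches the primary as it was after write 0, not a mix of states.
print(replica.data == state_after(log, replica.next_seq))
```

Applying writes 2 and 3 as they arrived would have produced blk1 = "C" without blk2, a combination the primary never held.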

Choosing between synchronous and asynchronous replication: The replication process can be synchronous, where no write operation finishes until the corresponding data is committed at the secondary site, or asynchronous, where the write operation can finish at the main site and then be replicated (as a background operation) to the secondary site.

Synchronous replication means that the replicated data is always up to date, but it slows application performance while each operation waits for replication. Synchronous replication is best for multi-site clusters that use high-bandwidth, low-latency connections. Typically, this means that a cluster using synchronous replication must not be stretched over a great distance. Synchronous replication is typically used within about 200 km, where reliable WAN connectivity with enough bandwidth is available. For example, with a Gigabit Ethernet or 10 Gigabit Ethernet MPLS connection, you might choose synchronous replication, depending on the volume of your data.

Asynchronous replication can help maximize application performance, but if failover to the secondary site is necessary, some of the most recent user operations might not be reflected in the data after failover, because operations that finished recently might not yet have been replicated. Asynchronous replication is best for clusters that you want to stretch over greater geographical distances with no significant impact on application performance. It is typically used when the sites are more than 200 km apart or the WAN connectivity between them is not robust.
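The 200 km rule of thumb is easier to reason about once you estimate the latency that synchronous replication adds to every write. Light in optical fiber travels roughly 200 km per millisecond, so each synchronous write pays at least one round trip. The sketch below computes that lower bound (ignoring switching, protocol, and storage overhead) and encodes the rule of thumb from the text; the function names are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def min_sync_penalty_ms(distance_km: float) -> float:
    """Lower bound on extra latency per synchronous write: one fiber round trip."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


def suggest_mode(distance_km: float, robust_wan: bool, limit_km: float = 200.0) -> str:
    """Rule of thumb from the text: synchronous within ~200 km on a robust WAN."""
    if distance_km <= limit_km and robust_wan:
        return "synchronous"
    return "asynchronous"


for km in (50, 200, 800):
    print(f"{km} km: >= {min_sync_penalty_ms(km):.1f} ms per write,",
          suggest_mode(km, robust_wan=True))
```

Multiply the per-write penalty by the number of dependent writes in a transaction to see why long distances hurt synchronous workloads.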

Utilizing Windows Storage Server 2012 as shared storage

Windows® Storage Server 2012 is the Windows Server® 2012 platform of choice for network-attached storage (NAS) appliances offered by Microsoft partners.

Windows Storage Server 2012 enhances the traditional file-serving capabilities and extends file-based storage to application workloads such as Hyper-V, SQL Server, Exchange, and Internet Information Services (IIS). Windows Storage Server 2012 provides the following features for an organization:

Workgroup Edition

As many as 50 connections

Single processor socket

Up to 32 GB of memory

As many as 6 disks (no external SAS)

Standard Edition

No license limit on number of connections

Multiple processor sockets

No license limit on memory

No license limit on number of disks

De-duplication, virtualization (host plus 2 virtual machines for storage and disk management tools), and networking services (no domain controller)

Failover clustering for higher availability

Microsoft BranchCache for reduced WAN traffic

Presenting Storage from Windows Storage Server 2012 Standard

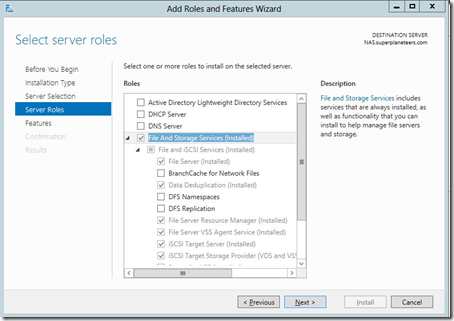

In Server Manager, click Add roles and features. On the Before You Begin page, click Next. On the Installation Type page, click Next.

On the Server Roles page, select iSCSI Target Server and iSCSI Target Storage Provider, and then click Next.

On the Features page, click Next. On the Confirmation page, click Install. When the installation finishes, click Close.

In Server Manager, click File and Storage Services, and then click iSCSI.

From the Tasks menu, click New iSCSI Virtual Disk, select the disk drive from which you want to present storage, and then click Next.

Type a name for the virtual disk, and then click Next.

Type the size of the shared disk, and then click Next.

Select New iSCSI target, and then click Next.

Type the name of the target, and then click Next.

Select IP Address in the Enter a value for the selected type list, type the IP address of a cluster node, and then click OK. Repeat the process to add the IP address of each cluster node.

Type the CHAP information. Note that the CHAP secret must be at least 12 characters long. Click Next to continue.

Click Create to create the shared storage, and then click Close.

Repeat these steps to create all the shared drives you need, in your preferred sizes, and create a 2 GB shared drive for the quorum disk.
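If you prefer not to invent CHAP secrets by hand, Python's `secrets` module can generate one. This sketch assumes a 12 to 16 character range, which is what the Microsoft iSCSI initiator is commonly documented to accept; confirm the limits for your initiator and target before relying on it. The function names are hypothetical.

```python
import secrets
import string

# Assumed CHAP secret length limits; verify against your iSCSI initiator/target.
CHAP_MIN, CHAP_MAX = 12, 16
ALPHABET = string.ascii_letters + string.digits


def new_chap_secret(length: int = CHAP_MAX) -> str:
    """Generate a random CHAP secret using a cryptographically secure source."""
    if not CHAP_MIN <= length <= CHAP_MAX:
        raise ValueError(f"CHAP secret must be {CHAP_MIN} to {CHAP_MAX} characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def is_valid_chap_secret(secret: str) -> bool:
    """Check only the length rule; character-set rules may vary by product."""
    return CHAP_MIN <= len(secret) <= CHAP_MAX


if __name__ == "__main__":
    print(new_chap_secret())
```

Use the same secret on the target (Windows Storage Server) and on each cluster node's initiator.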

Deploying a Failover Cluster in Microsoft environment

Step 1: Connect the cluster servers to the networks and storage

1. Review the network and Active Directory requirements described earlier in this article.

2. Connect and configure the networks that the servers in the cluster will use.

3. Follow the manufacturer's instructions for physically connecting the servers to the storage. For this article, we are using software iSCSI initiator. Open software iSCSI initiator from Server manager>Tools>iSCSI Initiator. Type the IP address of target that is the IP address of Microsoft Windows Storage Server 2012. Click Quick Connect, Click Done.

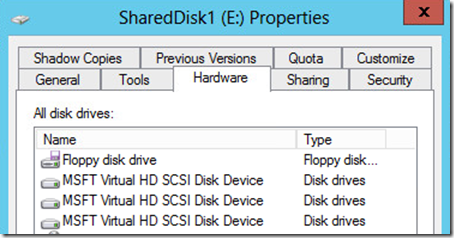

5. Open Computer Management, Click Disk Management, Initialize and format the disk using either MBR and GPT disk type. Go to second server, open Computer Management, Click Disk Management, bring the disk online simply by right clicking on the disk and clicking bring online. Ensure that the disks (LUNs) that you want to use in the cluster are exposed to the servers that you will cluster (and only those servers).

6. On one of the servers that you want to cluster, click Start, click Administrative Tools, click Computer Management, and then click Disk Management. (If the User Account Control dialog box appears, confirm that the action it displays is what you want, and then click Continue.) In Disk Management, confirm that the cluster disks are visible.

7. If you want to have a storage volume larger than 2 terabytes, and you are using the Windows interface to control the format of the disk, convert that disk to the partition style called GUID partition table (GPT). To do this, back up any data on the disk, delete all volumes on the disk and then, in Disk Management, right-click the disk (not a partition) and click Convert to GPT Disk.

8. Check the format of any exposed volume or LUN. Use NTFS file format.

Step 2: Install the failover cluster feature

In this step, you install the failover cluster feature. The servers must be running Windows Server 2012.

1. Open Server Manager and click Add roles and features. Follow the wizard to the Features page.

2. On the Features page, select Failover Clustering, and then click Install.

3. Follow the instructions in the wizard to complete the installation of the feature. When the wizard finishes, close it.

4. Repeat the process for each server that you want to include in the cluster.

Step 3: Validate the cluster configuration

Before creating a cluster, I strongly recommend that you validate your configuration. Validation helps you confirm that the configuration of your servers, network, and storage meets a set of specific requirements for failover clusters.

1. To open the failover cluster snap-in, click Server Manager, click Tools, and then click Failover Cluster Manager.

2. Confirm that Failover Cluster Manager is selected and then, in the center pane under Management, click Validate a Configuration. Click Next.

3. On the Select Servers page, type the fully qualified domain name of each node that you want to add to the cluster, and then click Add.

4. Follow the instructions in the wizard to specify the two servers and the tests, and then run the tests. To fully validate your configuration, run all tests before creating a cluster. Click Next.

5. On the Confirmation page, click Next.

6. The Summary page appears after the tests run. To view the results, click View Report and read the test results. If you select Create the cluster now using the validated nodes, the Create Cluster Wizard starts when you click Finish.

To view the results of the tests after you close the wizard, see

SystemRoot\Cluster\Reports\Validation Report date and time.html

where SystemRoot is the folder in which the operating system is installed (for example, C:\Windows).

7. As necessary, make changes in the configuration and rerun the tests.
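Because validation reports accumulate in the Reports folder, a small script can pick out the latest one. This Python sketch assumes the report files start with `Validation Report` as described above and falls back to `C:\Windows` if the `SystemRoot` environment variable is not set; the function name is hypothetical.

```python
import os
from pathlib import Path


def newest_validation_report(reports_dir=None):
    """Return the most recently modified 'Validation Report*' file, or None."""
    if reports_dir is None:
        # Location given in the text: %SystemRoot%\Cluster\Reports
        system_root = os.environ.get("SystemRoot", r"C:\Windows")
        reports_dir = Path(system_root) / "Cluster" / "Reports"
    reports = [p for p in Path(reports_dir).glob("Validation Report*") if p.is_file()]
    # max() with default=None handles an empty or missing folder.
    return max(reports, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    latest = newest_validation_report()
    print(latest if latest else "No validation reports found")
```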

Step 4: Create a failover cluster

1. To open the failover cluster snap-in, click Server Manager, click Tools, and then click Failover Cluster Manager.

2. Confirm that Failover Cluster Manager is selected and then, in the center pane under Management, click Create a Cluster. If you selected Create the cluster now using the validated nodes at the end of validation, the Create Cluster Wizard opens automatically. Follow the instructions in the wizard to specify the following, and then click Next:

- The servers to include in the cluster.

- The name of the cluster, that is, the cluster's virtual name.

- The IP address of the virtual node.

3. Verify the IP address and cluster name, and then click Next.

4. After the wizard runs and the Summary page appears, to view a report of the tasks the wizard performed, click View Report. Click Finish.

Step 5: Verify cluster configuration

In Failover Cluster Manager, click Networks, right-click the heartbeat network, and click Properties. Make sure Allow clients to connect through this network is cleared, verify the IP range, and then click OK.

Right-click the domain network, click Properties, make sure Allow clients to connect through this network is selected, verify the IP range, and then click OK.

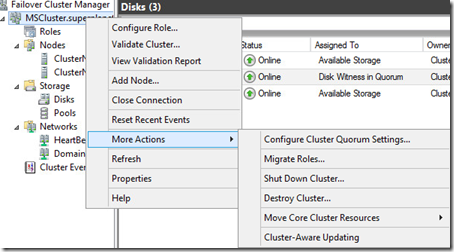

In Failover Cluster Manager, click Storage, click Disks, and verify that the quorum disk and shared disks are available. You can add more disks by clicking Add Disk in the Actions pane.

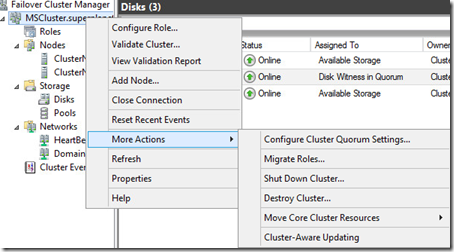

When you create the cluster, the wizard configures quorum automatically. However, you can configure the quorum manually by right-clicking the cluster and clicking More Actions > Configure Cluster Quorum Settings.

Configuring a Hyper-V Cluster

In the previous steps you configured an MSCS cluster. To configure a Hyper-V cluster, install the Hyper-V role on each cluster node: in Server Manager, click Add roles and features, follow the wizard, and install the Hyper-V role. A restart is required to complete the installation. Install the role on both nodes before you continue.

At this stage, add storage for virtual machines, and add networks for Live Migration, iSCSI storage (if you use iSCSI), virtual machine traffic, and management. Detailed configuration is out of scope for this article, which covers MSCS clustering rather than Hyper-V.

In Failover Cluster Manager, right-click Networks, click Live Migration Settings, and select the appropriate network for live migration.

If you want additional fault tolerance for virtual machines, such as Hyper-V Replica, right-click the cluster virtual node, click Configure Role, and then click Next.

On the Select Role page, click Hyper-V Replica Broker, click Next, and follow the wizard.

In Failover Cluster Manager, right-click Roles, point to Virtual Machines, and click New Hard Disk to create the virtual machine storage and configuration disk. Then right-click Roles, point to Virtual Machines, and click New Virtual Machine to create the virtual machine.

Backing up Clustered data, application or server

There are multiple methods for backing up information that is stored on Cluster Shared Volumes in a failover cluster running on any of the following:

Windows Server 2008 R2

Hyper-V Server 2008 R2

Windows Server 2012

Hyper-V Server 2012

Operating System Level backup

The backup application runs within a virtual machine in the same way that a backup application runs within a physical server. When multiple virtual machines are managed centrally, each virtual machine can run a backup "agent" (instead of an individual backup application) that is controlled from the central management server. The backup agent backs up application data, files, folders, and the system state of the operating system.

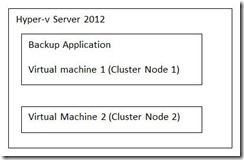

Hyper-V Image Level backup

The backup captures all the information about multiple virtual machines that are configured in a failover cluster that uses Cluster Shared Volumes. The backup application runs through Hyper-V, which means that it must use the VSS Hyper-V writer, and it must also be compatible with Cluster Shared Volumes. The backup application backs up the virtual machines that the administrator selects, including all the VHD files for those virtual machines, in one operation. For example, VM1_Data.VHDX, VM2_Data.VHDX, VM1_System.VHDX, and VM2_System.VHDX are stored on a backup disk or tape. VM1_System.VHDX and VM2_System.VHDX contain the system files and page files; the system state, snapshots, and VM configuration are backed up as well.

Publishing an Application or Service in a Failover Cluster Environment

1. To open the failover cluster snap-in, click Server Manager, click Tools, and then click Failover Cluster Manager.

2. Right-click Roles, and then click Configure Role to publish a service or application.

3. Select a clustered service or application, and then click Next.

4. Follow the instructions in the wizard to specify the following details:

A name for the clustered file server

IP address of virtual node

5. On the Select Storage page, select the storage volume or volumes that the clustered file server should use, and then click Next.

6. On the Confirmation page, review the settings, and then click Next.

7. After the wizard runs and the Summary page appears, to view a report of the tasks the wizard performed, click View Report.

8. To close the wizard, click Finish.

9. In the console tree, make sure Services and Applications is expanded, and then select the clustered file server that you just created.

10. After completing the wizard, confirm that the clustered file server comes online. If it does not, review the state of the networks and storage and correct any issues. Then right-click the new clustered application or service and click Bring this service or application online.

Perform a Failover Test

To perform a basic test of failover, right-click the clustered file server, click Move this service or application to another node, and click the available choice of node. When prompted, confirm your choice. You can observe the status changes in the center pane of the snap-in as the clustered file server instance is moved.

Configuring a New Failover Cluster by Using Windows PowerShell

| Task | PowerShell command | Notes |
|---|---|---|
| Run validation tests on a list of servers. | `Test-Cluster -Node server1,server2` | server1 and server2 are the servers that you want to validate. |
| Create a cluster using defaults for most settings. | `New-Cluster -Name cluster1 -Node server1,server2` | server1 and server2 are the servers that you want to include in the new cluster. |
| Configure a clustered file server using defaults for most settings. | `Add-ClusterFileServerRole -Storage "Cluster Disk 4"` | Cluster Disk 4 is the disk that the clustered file server will use. |
| Configure a clustered print server using defaults for most settings. | `Add-ClusterPrintServerRole -Storage "Cluster Disk 5"` | Cluster Disk 5 is the disk that the clustered print server will use. |
| Configure a clustered virtual machine using defaults for most settings. | `Add-ClusterVirtualMachineRole -VirtualMachine VM1` | VM1 is an existing virtual machine that you want to place in a cluster. |
| Add available disks. | `Get-ClusterAvailableDisk \| Add-ClusterDisk` | |
| Review the state of nodes. | `Get-ClusterNode` | |
| Run validation tests on a new server. | `Test-Cluster -Node newserver,node1,node2` | newserver is the new server that you want to add to a cluster; node1 and node2 are nodes in that cluster. |
| Prepare a node for maintenance. | `Get-ClusterNode node2 \| Get-ClusterGroup \| Move-ClusterGroup` | node2 is the node from which you want to move clustered services and applications. |
| Pause a node. | `Suspend-ClusterNode node2` | node2 is the node that you want to pause. |
| Resume a node. | `Resume-ClusterNode node2` | node2 is the node that you want to resume. |
| Stop the Cluster service on a node. | `Stop-ClusterNode node2` | node2 is the node on which you want to stop the Cluster service. |
| Start the Cluster service on a node. | `Start-ClusterNode node2` | node2 is the node on which you want to start the Cluster service. |
| Review the signature and other properties of a cluster disk. | `Get-ClusterResource "Cluster Disk 2" \| Get-ClusterParameter` | Cluster Disk 2 is the disk for which you want to review the disk signature. |
| Move Available Storage to a particular node. | `Move-ClusterGroup "Available Storage" -Node node1` | node1 is the node that you want to move Available Storage to. |
| Turn on maintenance for a disk. | `Suspend-ClusterResource "Cluster Disk 2"` | Cluster Disk 2 is the disk in cluster storage for which you are turning on maintenance. |
| Turn off maintenance for a disk. | `Resume-ClusterResource "Cluster Disk 2"` | Cluster Disk 2 is the disk in cluster storage for which you are turning off maintenance. |
| Bring a clustered service or application online. | `Start-ClusterGroup "Clustered Server 1"` | Clustered Server 1 is a clustered server (such as a file server) that you want to bring online. |
| Take a clustered service or application offline. | `Stop-ClusterGroup "Clustered Server 1"` | Clustered Server 1 is a clustered server (such as a file server) that you want to take offline. |
| Move or test a clustered service or application. | `Move-ClusterGroup "Clustered Server 1"` | Clustered Server 1 is a clustered server (such as a file server) that you want to test or move. |

-

Choose the resource group or groups that you want to migrate.

-

Specify whether the resource groups to be migrated will use new storage or the same storage that you used in the old cluster. If the resource groups will use new storage, you can specify the disk that each resource group should use. Note that if new storage is used, you must handle all copying or moving of data or folders—the wizard does not copy data from one shared storage location to another.

-

If you are migrating from a cluster running Windows Server 2003 that has Network Name resources with Kerberos protocol enabled, specify the account name and password for the Active Directory account that is used by the Cluster service on the old cluster.

Migrating clustered services and applications to a new failover cluster

Use the following instructions to migrate clustered services and applications from your old cluster to your new cluster. After the Migrate a Cluster Wizard runs, it leaves most of the migrated resources offline, so that you can perform additional steps before you bring them online. If the new cluster uses old storage, plan how you will make LUNs or disks inaccessible to the old cluster and accessible to the new cluster (but do not make changes yet).

1. To open the failover cluster snap-in, click Administrative Tools, and then click Failover Cluster Manager.

2. In the console tree, if the cluster that you created is not displayed, right-click Failover Cluster Manager, click Manage a Cluster, and then select the cluster that you want to configure.

3. In the console tree, expand the cluster that you created to see the items underneath it.

4. If the clustered servers are connected to a network that is not to be used for cluster communications (for example, a network intended only for iSCSI), then under Networks, right-click that network, click Properties, and then click Do not allow cluster network communication on this network. Click OK.

5. In the console tree, select the cluster. Click Configure, click Migrate services and applications.

6. Read the first page of the Migrate a Cluster Wizard, and then click Next.

7. Specify the name or IP Address of the cluster or cluster node from which you want to migrate resource groups, and then click Next.

8. Click View Report. The wizard also provides a report after it finishes, which describes any additional steps that might be needed before you bring the migrated resource groups online.

9. Follow the instructions in the wizard to complete the following tasks:

-

After the wizard runs and the Summary page appears, click View Report.

-

If the new cluster will use old storage, follow your plan for making LUNs or disks inaccessible to the old cluster and accessible to the new cluster.

-

If the new cluster will use new storage, copy the appropriate folders and data to the storage. As needed for disk access on the old cluster, bring individual disk resources online on that cluster. (Keep other resources offline, to ensure that clients cannot change data on the disks in storage.) Also as needed, on the new cluster, use Disk Management to confirm that the appropriate LUNs or disks are visible to the new cluster and not visible to any other servers.

14. When the wizard completes, most migrated resources will be offline. Leave them offline at this stage.

Completing the transition from the old cluster to the new cluster. You must perform the following steps to complete the transition to the new cluster running Windows Server 2012.

1. Prepare for clients to experience downtime, probably brief.

2. Take each resource group offline on the old cluster.

3. Complete the transition for the storage.

4. If the new cluster uses mount points, adjust the mount points as needed, and make each disk resource that uses a mount point dependent on the resource of the disk that hosts the mount point.

5. Bring the migrated services or applications online on the new cluster. To perform a basic test of failover on the new cluster, expand Services and Applications, and then click a migrated service or application that you want to test.

6. To perform a basic test of failover for the migrated service or application, under Actions (on the right), click Move this service or application to another node, and then click an available choice of node. When prompted, confirm your choice. You can observe the status changes in the center pane of the snap-in as the clustered service or application is moved.

7. If there are any issues with failover, review the following:

- View events in Failover Cluster Manager. To do this, in the console tree, right-click Cluster Events, and then click Query. In the Cluster Events Filter dialog box, select the criteria for the events that you want to display, or to return to the default criteria, click the Reset button. Click OK. To sort events, click a heading, for example, Level or Date and Time.

- Confirm that necessary services, applications, or server roles are installed on all nodes. Confirm that services or applications are compatible with Windows Server 2008 R2 and run as expected.

- If you used old storage for the new cluster, rerun the Validate a Cluster Configuration Wizard to confirm the validation results for all LUNs or disks in the storage.

- Review migrated resource settings and dependencies.

- If you migrated one or more Network Name resources with Kerberos protocol enabled, confirm that the following permissions change was made in Active Directory Users and Computers on a domain controller: in the computer accounts (computer objects) of your Kerberos protocol-enabled Network Name resources, Full Control must be assigned to the computer account for the failover cluster.

For a clustered DHCP server that comes from a cluster running Windows Server 2003 or Windows Server 2003 R2, the following registry values are also needed under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\DHCPServer\Parameters (they belong to the Windows Server 2003 branch of step 5 in Step 2 of the DHCP migration below):

- Right-click Parameters, click New, click String Value, and for the name of the new value, type: ServiceMain

- Right-click the new value (ServiceMain), click Modify, and for the value data, type: ServiceEntry

- Right-click Parameters again, click New, click Expandable String Value, and for the name of the new value, type: ServiceDll

- Right-click the new value (ServiceDll), click Modify, and for the value data, type: %systemroot%\system32\dhcpssvc.dll
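If you need to add these two values on several nodes, they can also be captured in a .reg file and imported with regedit. The Python sketch below builds such a file; it assumes the DHCPServer\Parameters key used elsewhere in this article, and the output path you pass to `save_reg_file` is up to you. A .reg file stores an Expandable String Value as `hex(2)` bytes (UTF-16LE with a terminating null), so the helper encodes `%systemroot%\system32\dhcpssvc.dll` that way instead of writing plain text, which keeps `%systemroot%` unexpanded.

```python
"""Build a .reg file that adds the ServiceMain and ServiceDll values (sketch)."""
from pathlib import Path

# Registry key used by the DHCP registry steps in this article.
KEY = r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\DHCPServer\Parameters"
SERVICE_MAIN = "ServiceEntry"
# Kept unexpanded: Windows resolves %systemroot% when the value is read.
SERVICE_DLL = r"%systemroot%\system32\dhcpssvc.dll"


def reg_expand_sz(value: str) -> str:
    """Encode a REG_EXPAND_SZ value as .reg files store it: hex(2), UTF-16LE, null-terminated."""
    data = (value + "\0").encode("utf-16-le")
    return "hex(2):" + ",".join(f"{b:02x}" for b in data)


def build_reg_file() -> str:
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        f"[{KEY}]",
        f'"ServiceMain"="{SERVICE_MAIN}"',             # String Value (REG_SZ)
        f'"ServiceDll"={reg_expand_sz(SERVICE_DLL)}',  # Expandable String Value
        "",
    ]
    return "\r\n".join(lines)


def save_reg_file(path: Path) -> None:
    # Version 5.00 .reg files are UTF-16LE; write the byte-order mark explicitly.
    path.write_bytes(b"\xff\xfe" + build_reg_file().encode("utf-16-le"))


if __name__ == "__main__":
    print(build_reg_file())
```

Review the generated file before importing it (for example with `regedit /s` or by double-clicking it), and keep a backup of the key.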

Migrating Cluster Resource with new Mount Point

When you are working with new storage for your cluster migration, you have some flexibility in the order in which you complete the tasks. The tasks that you must complete include creating the mount points, running the Migrate a Cluster Wizard, copying the data to the new storage, and confirming the disk letters and mount points for the new storage. After completing the other tasks, configure the disk resource dependencies in Failover Cluster Manager.

A useful way to keep track of disks in the new storage is to give them labels that indicate your intended mount point configuration. For example, in the new storage, when you are mounting a new disk in a folder called \Mount1-1 on another disk, you can also label the mounted disk as Mount1-1. (This assumes that the label Mount1-1 is not already in use in the old storage.) Then when you run the Migrate a Cluster Wizard and you need to specify that disk for a particular migrated resource, you can look at the list and select the disk labeled Mount1-1. Then you can return to Failover Cluster Manager to configure the disk resource for Mount1-1 so that it is dependent on the appropriate resource, for example, the resource for disk F. Similarly, you would configure the disk resources for all other disks mounted on disk F so that they depend on the disk resource for disk F.
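The labeling convention above maps directly onto a list of dependencies. As a sketch, assuming you record each mounted disk's cluster resource name together with its mount folder (for example F:\Mount1-1), plus the resource name of each host disk, the helper below emits one `Add-ClusterResourceDependency` command (FailoverClusters PowerShell module) per mounted disk. The resource names in the example are hypothetical; cluster disks are often named Cluster Disk 1, Cluster Disk 2, and so on, so map your labels to the names actually shown in Failover Cluster Manager.

```python
"""Turn a mount-point label plan into disk resource dependencies (sketch)."""
from pathlib import PureWindowsPath


def dependency_commands(mounts: dict[str, str], disk_resources: dict[str, str]) -> list[str]:
    r"""
    mounts:         mounted-disk resource name -> mount folder, e.g. "Mount1-1" -> "F:\Mount1-1"
    disk_resources: drive letter of a host disk -> that disk's cluster resource name
    Returns one Add-ClusterResourceDependency command per mounted disk.
    """
    commands = []
    for mounted, folder in sorted(mounts.items()):
        host_drive = PureWindowsPath(folder).drive.upper()  # "F:\Mount1-1" -> "F:"
        if host_drive not in disk_resources:
            raise KeyError(f"no disk resource recorded for host drive {host_drive}")
        provider = disk_resources[host_drive]
        # The mounted disk depends on the disk that hosts its mount point.
        commands.append(
            f'Add-ClusterResourceDependency -Resource "{mounted}" -Provider "{provider}"'
        )
    return commands


if __name__ == "__main__":
    plan = {"Mount1-1": r"F:\Mount1-1", "Mount1-2": r"F:\Mount1-2"}
    hosts = {"F:": "Cluster Disk F"}  # hypothetical resource name
    for line in dependency_commands(plan, hosts):
        print(line)
```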

Migrating DHCP to a Cluster Running Windows Server 2012

A failover cluster is a group of independent computers that work together to increase the availability of applications and services. The clustered servers (called nodes) are connected by physical cables and by software. If one of the cluster nodes fails, another node begins to provide service (a process known as failover). Users experience a minimum of disruptions in service.

This guide describes the steps that are necessary when migrating a clustered DHCP server to a cluster running Windows Server 2012, beyond the standard steps required for migrating clustered services and applications in general. The guide indicates when to use the Migrate a Cluster Wizard in the migration, but does not describe the wizard in detail.

Step 1: Review requirements and create a cluster running Windows Server 2012

Before beginning the migration described in this guide, review the requirements for a cluster running Windows Server 2012, install the Failover Clustering feature on servers running Windows Server 2012, and create a new cluster.

Step 2: On the old cluster, adjust registry settings and permissions before migration

To prepare for migration, you must make changes to registry settings and permissions on each node of the old cluster.

1. Confirm that you have a current backup of the old cluster, one that includes the configuration information for the clustered DHCP server (also called the DHCP resource group).

2. Confirm that the clustered DHCP server is online on the old cluster. It must be online while you complete the remainder of this procedure.

3. On a node of the old cluster, open a command prompt as an administrator.

4. Type regedit, press Enter, and then navigate to:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\DHCPServer\Parameters

5. Choose the option that applies to your cluster: If the old cluster is running Windows Server 2008, skip to step 6. If the old cluster is running Windows Server 2003 or Windows Server 2003 R2, first add two values under Parameters: a String Value named ServiceMain with the data ServiceEntry, and an Expandable String Value named ServiceDll with the data %systemroot%\system32\dhcpssvc.dll. Then continue with step 6.

6. Right-click Parameters, and then click Permissions.

7. Click Add. Locate the appropriate account and assign permissions:

- On Windows Server 2008: Click Locations, select the local server, and then click OK. Under Enter the object names to select, type NT Service\DHCPServer. Click OK. Select the DHCPServer account and then select the check box for Full Control.

- On Windows Server 2003 or Windows Server 2003 R2: Click Locations, ensure that the domain name is selected, and then click OK. Under Enter the object names to select, type Everyone, and then click OK (and confirm your choice if prompted). Under Group or user names, select Everyone and then select the check box for Full Control.

8. Repeat the process on the other node or nodes of the old cluster.
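The account to add depends only on the operating system of the old cluster. The helper below simply restates the two account bullets above as data, which can be handy in a migration checklist script; the operating system strings it accepts are an assumption of this sketch.

```python
"""Which account gets Full Control on the DHCPServer Parameters key (sketch)."""
from typing import NamedTuple


class Grant(NamedTuple):
    location: str    # choice under Locations in the Select Users dialog
    principal: str   # text typed under "Enter the object names to select"
    permission: str


def parameters_key_grant(old_cluster_os: str) -> Grant:
    os_name = " ".join(old_cluster_os.lower().split())
    if os_name == "windows server 2008":
        return Grant("local server", r"NT Service\DHCPServer", "Full Control")
    if os_name in ("windows server 2003", "windows server 2003 r2"):
        # Temporary: this Everyone entry is removed on the new cluster after migration.
        return Grant("domain", "Everyone", "Full Control")
    raise ValueError(f"not covered by this procedure: {old_cluster_os!r}")


if __name__ == "__main__":
    print(parameters_key_grant("Windows Server 2003 R2"))
```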

Step 3: On a node in the old cluster, prepare for export, and then export the DHCP database to a file

As part of migrating a clustered DHCP server, on the old cluster, you must export the DHCP database to a file. This requires preparatory steps that prevent the cluster from restarting the clustered DHCP resource during the export. The following procedure describes the process. On the old cluster, start the clustering snap-in and configure the restart setting for the clustered DHCP server (DHCP resource group):

1. Click Start, click Administrative Tools, and then click Failover Cluster Management. If the User Account Control dialog box appears, confirm that the action it displays is what you want, and then click Continue.

2. If the console tree is collapsed, expand the tree under the cluster that you are migrating settings from. Expand Services and Applications and then, in the console tree, click the clustered DHCP server.

3. In the center pane, right-click the DHCP server resource, click Properties, click the Policies tab, and then click If resource fails, do not restart.

This step prevents the resource from restarting during the export of the DHCP database, which would stop the export.

Then export the DHCP database:

1. On the node of the old cluster that currently owns the clustered DHCP server, confirm that the clustered DHCP server is running. Then open a command prompt window as an administrator.

2. Type: netsh dhcp server export <exportfile> all

Where <exportfile> is the name of the file to which you want to export the DHCP database.

3. After the export is complete, in the clustering interface (Cluster Administrator or Failover Cluster Management), right-click the clustered DHCP server (DHCP resource group) and then click either Take Offline or Take this service or application offline. If the command is unavailable, in the center pane, right-click each online resource and click either Take Offline or Take this resource offline. If prompted for confirmation, confirm your choice.

4. If the old cluster is running Windows Server 2003 or Windows Server 2003 R2, obtain the account name and password for the Cluster service account (the Active Directory account used by the Cluster service on the old cluster). Alternatively, you can obtain the name and password of another account that has access permissions for the Active Directory computer accounts (objects) that the old cluster uses. For a migration from a cluster running Windows Server 2003 or Windows Server 2003 R2, you will need this information for the next procedure.
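The export command in step 2 can be assembled from a file path as sketched below. The helper quotes the path when it contains spaces and always passes `all`, as in the step above; the example paths are hypothetical. On the owning node you could pass the resulting string to `subprocess.run(..., check=True)`, but the sketch itself only builds the text.

```python
"""Build the netsh command that exports the DHCP database (sketch)."""


def quote(arg: str) -> str:
    # Quote a path only when it contains spaces.
    return f'"{arg}"' if " " in arg else arg


def export_command(export_file: str) -> str:
    # "all" exports every scope, matching step 2 above.
    return f"netsh dhcp server export {quote(export_file)} all"


if __name__ == "__main__":
    print(export_command(r"C:\dhcp export\dhcpdb.txt"))  # hypothetical path
```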

Step 4: On the new cluster, configure a network for DHCP clients and run the Migrate a Cluster Wizard

Microsoft recommends that you make the network settings on the new cluster as similar as possible to the settings on the old cluster. In any case, on the new cluster, you must have at least one network that DHCP clients can use to communicate with the cluster. The following procedure describes the cluster setting needed on the client network, and indicates when to run the Migrate a Cluster Wizard.

1. On the new cluster (running Windows Server 2012), open Server Manager, click Tools, and then click Failover Cluster Manager.

2. If the cluster that you want to configure is not displayed, in the console tree, right-click Failover Cluster Manager, click Manage a Cluster, and then select or specify the cluster that you want.

3. If the console tree is collapsed, expand the tree under the cluster.

4. Expand Networks, right-click the network that clients will use to connect to the DHCP server, and then click Properties.

5. Make sure that Allow cluster network communication on this network and Allow clients to connect through this network are selected.

6. To prepare for the migration process, find and take note of the drive letter used for the DHCP database on the old cluster. Ensure that the same drive letter exists on the new cluster. (This drive letter is one of the settings that the Migrate a Cluster Wizard will migrate.)

7. In Failover Cluster Manager, in the console tree, select the new cluster, and then under Configure, click Migrate services and applications.

8. Use the Migrate a Cluster Wizard to migrate the DHCP resource group from the old cluster to the new cluster. If you are using new storage on the new cluster, during the migration, be sure to specify the disk that has the same drive letter on the new cluster as was used for the DHCP database on the old cluster. The wizard will migrate resources and settings, but not the DHCP database.
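In PowerShell terms, the two check boxes in step 5 correspond to the `Role` property of a cluster network (0 = not used by the cluster, 1 = cluster communication only, 3 = cluster and client). The sketch below maps the check boxes to that value and prints a `Get-ClusterNetwork` command that sets it; the network name is hypothetical, and the client option is only available when cluster communication is also allowed.

```python
"""Map the cluster network check boxes to the network Role value (sketch)."""

NONE, CLUSTER_ONLY, CLUSTER_AND_CLIENT = 0, 1, 3


def network_role(allow_cluster: bool, allow_clients: bool) -> int:
    if allow_clients and not allow_cluster:
        # The client check box is only available when cluster communication is allowed.
        raise ValueError("client access requires cluster communication on the same network")
    if allow_clients:
        return CLUSTER_AND_CLIENT
    return CLUSTER_ONLY if allow_cluster else NONE


def set_role_command(network_name: str, role: int) -> str:
    return f'(Get-ClusterNetwork -Name "{network_name}").Role = {role}'


if __name__ == "__main__":
    # Step 5: both check boxes selected on the client-facing network (hypothetical name).
    print(set_role_command("Client Network", network_role(True, True)))
```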

Step 5: On the new cluster, import the DHCP database, bring the clustered DHCP server online, and adjust permissions

To complete the migration process, import the DHCP database that you exported to a file in Step 3. Then you can bring the clustered DHCP server online and adjust settings that were changed temporarily during the migration process.

1. If you are reusing the old cluster storage for the new cluster, confirm that you have stored the exported DHCP database file in a safe location. Then be sure to delete all the DHCP files other than the exported DHCP database file from the old storage. This includes the DHCP database, log, and backup files.

2. On the new cluster, in Failover Cluster Manager, expand Services and Applications, right-click the clustered DHCP server, and then click Bring this service or application online. The DHCP service starts with an empty database.

3. Click the clustered DHCP server.

4. In the center pane, right-click the DHCP server resource, click Properties, click the Policies tab, and then click If resource fails, do not restart. This step prevents the resource from restarting during the import of the DHCP database, which would stop the import.

5. In the new cluster, on the node that currently owns the migrated DHCP server, view the disk used by the migrated DHCP server, and make sure that you have copied the exported DHCP database file to this disk.

6. In the new cluster, on the node that currently owns the migrated DHCP server, open a command prompt as an administrator. Change to the disk used by the migrated DHCP server.

7. Type: netsh dhcp server import <exportfile>

Where <exportfile> is the filename of the file to which you exported the DHCP database.

8. If the migrated DHCP server is not online, in Failover Cluster Manager, under Services and Applications, right-click the migrated DHCP server, and then click Bring this service or application online.

9. In the center pane, right-click the DHCP server resource, click Properties, click the Policies tab, and then click If resource fails, attempt restart on current node.

This returns the resource to the expected setting, instead of the "do not restart" setting that was temporarily needed during the import of the DHCP database.

10. If the cluster was migrated from Windows Server 2003 or Windows Server 2003 R2, after the clustered DHCP server is online on the new cluster, make the following changes to permissions in the registry:

On the node that owns the clustered DHCP server, open a command prompt as an administrator.

Type regedit, press Enter, and then navigate to:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\DHCPServer\Parameters

Right-click Parameters, and then click Permissions.

Click Add, click Locations, and then select the local server.

Under Enter the object names to select, type NT Service\DHCPServer and then click OK. Select the DHCPServer account and then select the check box for Full Control. Then click Apply.

Select the Everyone account (added in Step 2 of this procedure) and then click Remove. This removes the account from the list of those that are assigned permissions.

11. Perform the preceding steps only after DHCP is online on the new cluster. After you complete these steps, you can test the clustered DHCP server and begin to provide DHCP services to clients.
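Steps 5 to 7 (copy the export file to the DHCP disk, change to that disk, and import) can be checked together before you run anything. The sketch below assumes you know the root of the migrated DHCP server's disk and the export file name; both example values are hypothetical. Like step 7, it builds the command without a scope argument; run `netsh dhcp server import /?` on your server to confirm the syntax it expects.

```python
"""Check the export file location and build the DHCP import command (sketch)."""
from pathlib import Path


def import_command(dhcp_disk_root: str, export_file_name: str) -> str:
    export_path = Path(dhcp_disk_root) / export_file_name
    if not export_path.is_file():
        # Step 5: the exported database must already be on the migrated DHCP server's disk.
        raise FileNotFoundError(f"copy the exported DHCP database to {export_path} first")
    name = f'"{export_file_name}"' if " " in export_file_name else export_file_name
    # Step 6 changes to that disk first, so the bare file name is passed to netsh.
    return f"netsh dhcp server import {name}"


if __name__ == "__main__":
    try:
        print(import_command("S:\\", "dhcpdb.txt"))  # hypothetical disk and file name
    except FileNotFoundError as err:
        print(err)
```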

Configuring Print Server Cluster

Open Failover Cluster Management. In the console tree, if the cluster that you created is not displayed, right-click Failover Cluster Management, click Manage a Cluster, and then select the cluster you want to configure.

Click Services and Applications. Under Actions (on the right), click Configure a Service or Application, and then click Next. Click Print Server, and then click Next.

Follow the instructions in the wizard to specify the following details: a name for the clustered print server, any IP address information, and the storage volume or volumes that the clustered print server should use.

After the wizard runs and the Summary page appears, you can click View Report to view a report of the tasks the wizard performed. To close the wizard, click Finish.

In the console tree, make sure Services and Applications is expanded, and then select the clustered print server that you just created.

Under Actions, click Manage Printers.

An instance of the Failover Cluster Management interface appears with Print Management in the console tree.

Under Print Management, click Print Servers and locate the clustered print server that you want to configure.

Always perform management tasks on the clustered print server. Do not manage the individual cluster nodes as print servers.

Right-click the clustered print server, and then click Add Printer. Follow the instructions in the wizard to add a printer.

This is the same wizard you would use to add a printer on a nonclustered server.

When you have finished configuring settings for the clustered print server, to close the instance of the Failover Cluster Management interface with Print Management in the console tree, click File and then click Exit.

To perform a basic test of failover, right-click the clustered print server instance, click Move this service or application to another node, and click the available choice of node. When prompted, confirm your choice.
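The wizard inputs above (name, IP address, storage) and the failover test also have PowerShell counterparts in the FailoverClusters module, `Add-ClusterPrintServerRole` and `Move-ClusterGroup`. The sketch below only assembles those command lines as text; the role name, address, disk and node names are hypothetical, and you should confirm the parameters with `Get-Help` on your own server before running them.

```python
"""Assemble PowerShell commands for a clustered print server (sketch)."""
import ipaddress


def ps_quote(value: str) -> str:
    # PowerShell single-quoted string; an embedded single quote is doubled.
    return "'" + value.replace("'", "''") + "'"


def add_print_role(name: str, address: str, disks: list[str]) -> str:
    ipaddress.ip_address(address)  # raises ValueError for a malformed address
    if not disks:
        raise ValueError("the clustered print server needs at least one storage volume")
    storage = ",".join(ps_quote(d) for d in disks)
    return (
        f"Add-ClusterPrintServerRole -Name {ps_quote(name)} "
        f"-StaticAddress {ps_quote(address)} -Storage {storage}"
    )


def failover_test(name: str, node: str) -> str:
    # Counterpart of "Move this service or application to another node".
    return f"Move-ClusterGroup -Name {ps_quote(name)} -Node {ps_quote(node)}"


if __name__ == "__main__":
    print(add_print_role("PRINTCLUSTER01", "10.0.0.50", ["Cluster Disk 2"]))
    print(failover_test("PRINTCLUSTER01", "NODE02"))
```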

Configuring a Multisite SQL Server Failover Cluster

To install or upgrade a SQL Server failover cluster, you must run the Setup program on each node of the failover cluster. To add a node to an existing SQL Server failover cluster, you must run SQL Server Setup on the node that is to be added to the SQL Server failover cluster instance. Do not run Setup on the active node to manage the other nodes. The following options are available for SQL Server failover cluster installation:

Option 1: Integrated Installation with Add Node

Create and configure a single-node SQL Server failover cluster instance. When you configure the node successfully, you have a fully functional failover cluster instance. At this point, it does not have high availability because there is only one node in the failover cluster. On each node to be added to the SQL Server failover cluster, run Setup with Add Node functionality to add that node.

Option 2: Advanced/Enterprise Installation

After you run Prepare Failover Cluster on one node, Setup creates a ConfigurationFile.ini file that lists all the settings that you specified. On the additional nodes to be prepared, instead of repeating these steps, you can supply the autogenerated ConfigurationFile.ini file from the first node as an input to the Setup command line. This step prepares the nodes for clustering, but there is no operational instance of SQL Server at the end of this step.

After the nodes are prepared for clustering, run Setup on one of the prepared nodes. This step configures and finishes the failover cluster instance. At the end of this step, you will have an operational SQL Server failover cluster instance and all the nodes that were prepared previously for that instance will be the possible owners of the newly-created SQL Server failover cluster.
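The two options differ mainly in which Setup action runs on which node. The sketch below lists that order for a set of node names, using the action names SQL Server Setup accepts on the command line (`InstallFailoverCluster`, `AddNode`, `PrepareFailoverCluster`, `CompleteFailoverCluster`). The node names are hypothetical, and the sketch assumes the first node in the list owns the shared disk, which is where completion is preferably run.

```python
"""Order of SQL Server Setup actions for the two cluster install options (sketch)."""


def setup_plan(nodes: list[str], option: int) -> list[tuple[str, str]]:
    if not nodes:
        raise ValueError("at least one node is required")
    first, others = nodes[0], nodes[1:]
    if option == 1:
        # Integrated installation: a working single-node instance first, then Add Node.
        return [(first, "InstallFailoverCluster")] + [(n, "AddNode") for n in others]
    if option == 2:
        # Advanced installation: prepare every node, then complete on one prepared node.
        return [(n, "PrepareFailoverCluster") for n in nodes] + [(first, "CompleteFailoverCluster")]
    raise ValueError("option must be 1 or 2")


if __name__ == "__main__":
    for node, action in setup_plan(["DC1PPSQLNODE01", "DC1PPSQLNODE02"], 2):
        print(f"{node}: Setup.exe /ACTION={action}")
```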

Follow this procedure to install a new SQL Server failover cluster using the Integrated Simple Cluster Install:

Insert the SQL Server installation media, and from the root folder, double-click Setup.exe. To install from a network share, browse to the root folder on the share, and then double-click Setup.exe.


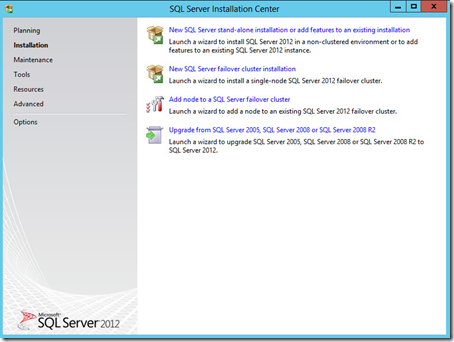

The Installation Wizard starts the SQL Server Installation Center. To create a new cluster installation of SQL Server, click New SQL Server failover cluster installation on the Installation page.

The System Configuration Checker runs a discovery operation on your computer. To continue, click OK.

You can view the details on the screen by clicking Show Details, or as an HTML report by clicking View detailed report. To continue, click Next.

On the Setup Support Files page, click Install to install the Setup support files.

The System Configuration Checker verifies the system state of your computer before Setup continues. After the check is complete, click Next to continue.

You can view the details on the screen by clicking Show Details, or as an HTML report by clicking View detailed report.

On the Product key page, indicate whether you are installing a free edition of SQL Server, or whether you have a PID key for a production version of the product.

On the License Terms page, read the license agreement, and then select the check box to accept the license terms and conditions.

To help improve SQL Server, you can also enable the feature usage option and send reports to Microsoft. Click Next to continue.

On the Feature Selection page, select the components for your installation. You can select any combination of check boxes, but only the Database Engine and Analysis Services support failover clustering. Other selected components will run as a stand-alone feature without failover capability on the current node that you are running Setup on.

The prerequisites for the selected features are displayed in the right-hand pane. SQL Server Setup will install the prerequisites that are not already installed during the installation step described later in this procedure. SQL Server Setup then runs one more set of rules, based on the features you selected, to validate your configuration.

On the Instance Configuration page, specify whether to install a default or a named instance, and then review the following settings:

- SQL Server Network Name: specify a network name for the new SQL Server failover cluster. This is the name of the virtual node of the cluster, and it is used to identify your failover cluster on the network.

- Instance ID: by default, the instance name is used as the Instance ID. The Instance ID identifies installation directories and registry keys for your instance of SQL Server, for both default and named instances. For a default instance, the instance name and instance ID would be MSSQLSERVER. To use a nondefault instance ID, select the Instance ID box and provide a value.

- Instance root directory: by default, the instance root directory is C:\Program Files\Microsoft SQL Server\. To specify a nondefault root directory, use the field provided, or click the ellipsis button to locate an installation folder.

- Detected SQL Server instances and features on this computer: the grid shows instances of SQL Server that are on the computer where Setup is running. If a default instance is already installed on the computer, you must install a named instance of SQL Server.

Click Next to continue.
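Before you type the SQL Server Network Name, you can check it against the naming rules in the requirements section of this article. This sketch assumes the 15-character NetBIOS limit for computer names and rejects the characters that section warns against (<, >, ", ', &) plus spaces and all-numeric names; it is a pre-check, not a complete implementation of Windows naming rules.

```python
"""Pre-check a SQL Server network (virtual node) name (sketch)."""

FORBIDDEN = set("<>\"'& ")  # characters from the requirements section, plus space
NETBIOS_MAX = 15


def network_name_problems(name: str) -> list[str]:
    if not name:
        return ["name is empty"]
    problems = []
    if len(name) > NETBIOS_MAX:
        problems.append(f"longer than {NETBIOS_MAX} characters")
    bad = sorted(set(name) & FORBIDDEN)
    if bad:
        problems.append("contains " + " ".join(repr(c) for c in bad))
    if name.isdigit():
        problems.append("consists only of digits")
    return problems


if __name__ == "__main__":
    for candidate in ("DC2PVSQLNODE02", "DC2PVSQLCLUSTER01", "SQL&01"):
        print(candidate, network_name_problems(candidate) or "ok")
```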

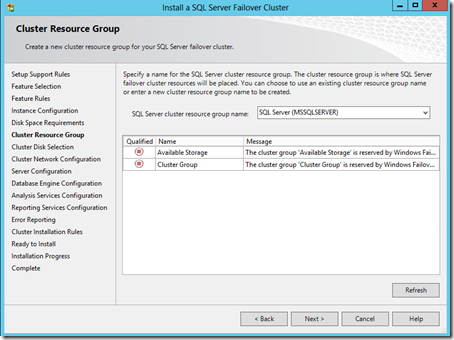

The Disk Space Requirements page calculates the required disk space for the features that you specify, and compares requirements to the available disk space on the computer where Setup is running.

Use the Cluster Resource Group page to specify the cluster resource group name where SQL Server virtual server resources will be located. To specify the SQL Server cluster resource group name, you have two options:

- Use the drop-down box to specify an existing group to use.

- Type the name of a new group to create. Be aware that the name "Available storage" is not a valid group name.

On the Cluster Disk Selection page, select the shared cluster disk resource for your SQL Server failover cluster. More than one disk can be specified. Click Next to continue.

On the Cluster Network Configuration page, specify the IP type and IP address for your failover cluster instance. Click Next to continue. The SQL Server Network Name that you specified on the Instance Configuration page will resolve to this IP address.

On the Server Configuration — Service Accounts page, specify login accounts for SQL Server services. The actual services that are configured on this page depend on the features that you selected to install.

Use this page to specify the Cluster Security Policy; use the default setting. Click Next to continue. The workflow for the rest of this topic depends on the features that you have specified for your installation. You might not see all the pages, depending on your selections (Database Engine, Analysis Services, Reporting Services).

You can assign the same login account to all SQL Server services, or you can configure each service account individually. The startup type is set to manual for all cluster-aware services, including full-text search and SQL Server Agent, and cannot be changed during installation. Microsoft recommends that you configure service accounts individually to provide least privileges for each service, so that SQL Server services are granted only the permissions they need to complete their tasks. To specify the same login account for all service accounts in this instance of SQL Server, provide credentials in the fields at the bottom of the page. When you are finished specifying login information for SQL Server services, click Next.

On the Server Configuration – Collation tab, use the default collations for the Database Engine and Analysis Services.

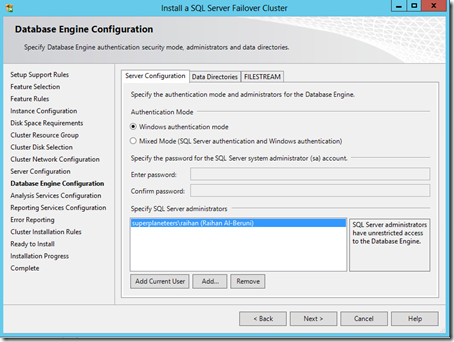

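Use the Database Engine Configuration – Account Provisioning page to specify the following:

- Security mode: select Windows Authentication or Mixed Mode Authentication for your instance of SQL Server.

- SQL Server administrators: you must specify at least one system administrator for the instance of SQL Server. To add the account under which SQL Server Setup is running, click Add Current User. To add or remove accounts, click Add or Remove, and then edit the list of users, groups, or computers that will have administrator privileges. When you are finished editing the list, click OK.

Verify the list of administrators in the configuration dialog box. When the list is complete, click Next.

Use the Database Engine Configuration – Data Directories page to specify nondefault installation directories. To install to default directories, click Next. Use the Database Engine Configuration – FILESTREAM page to enable FILESTREAM for your instance of SQL Server. Click Next to continue.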

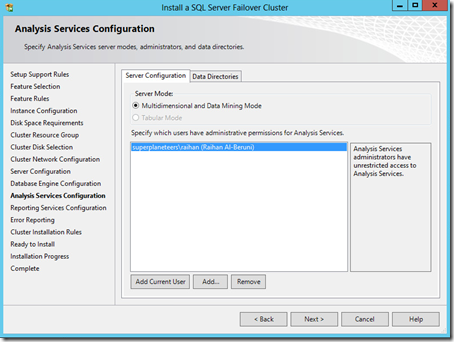

Use the Analysis Services Configuration — Account Provisioning page to specify users or accounts that will have administrator permissions for Analysis Services. You must specify at least one system administrator for Analysis Services. To add the account under which SQL Server Setup is running, click Add Current User. To add or remove accounts from the list of system administrators, click Add or Remove, and then edit the list of users, groups, or computers that will have administrator privileges for Analysis Services. When you are finished editing the list, click OK. Verify the list of administrators in the configuration dialog box. When the list is complete, click Next.

Use the Analysis Services Configuration — Data Directories page to specify nondefault installation directories. To install to default directories, click Next.

Use the Reporting Services Configuration page to specify the kind of Reporting Services installation to create. For a failover cluster installation, the option is set to Unconfigured Reporting Services installation, and you must configure Reporting Services after you complete the installation. In the author's experience, selecting the Install and configure option, where it is offered, does no harm if you are not a SQL Server specialist.

On the Error Reporting page, specify the information that you want to send to Microsoft to help improve SQL Server. By default, the error reporting options are disabled.

The System Configuration Checker runs one more set of rules to validate your configuration with the SQL Server features that you have specified.

The Ready to Install page displays a tree view of installation options that were specified during Setup. To continue, click Install. Setup will first install the required prerequisites for the selected features followed by the feature installation.

During installation, the Installation Progress page provides status so that you can monitor installation progress as Setup continues. After installation, the Complete page provides a link to the summary log file for the installation and other important notes. To complete the SQL Server installation process, click Close.

If you are instructed to restart the computer, do so now. It is important to read the message from the Installation Wizard when you have finished with Setup.

To add nodes to the single-node failover cluster instance you just created, run Setup on each additional node and follow the steps for the Add Node operation.
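The Cluster Network Configuration page has a command-line counterpart in the `/FAILOVERCLUSTERIPADDRESSES` parameter of SQL Server Setup, whose value for a static IPv4 address takes the form `IPv4;<address>;<cluster network name>;<subnet mask>`. The sketch below formats and validates that value with Python's `ipaddress` module; the address and network name in the example are hypothetical.

```python
"""Format the /FAILOVERCLUSTERIPADDRESSES value for SQL Server Setup (sketch)."""
import ipaddress


def failover_ip_arg(address: str, prefix_or_mask: str, cluster_network: str) -> str:
    # Accepts a prefix length ("24") or a dotted mask ("255.255.255.0");
    # raises ValueError if the address or mask is malformed.
    iface = ipaddress.IPv4Interface(f"{address}/{prefix_or_mask}")
    if ";" in cluster_network or '"' in cluster_network:
        raise ValueError("cluster network name cannot contain ; or \"")
    return (
        "/FAILOVERCLUSTERIPADDRESSES="
        f'"IPv4;{iface.ip};{cluster_network};{iface.netmask}"'
    )


if __name__ == "__main__":
    print(failover_ip_arg("10.0.0.60", "24", "Cluster Network 1"))
```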

SQL Advanced/Enterprise Failover Cluster Install

Step 1: Prepare the Environment

Insert the SQL Server installation media, and from the root folder, double-click Setup.exe.

Windows Installer 4.5 is required, and may be installed by the Installation Wizard. If you are prompted to restart your computer, restart and then start SQL Server Setup again.

After the prerequisites are installed, the Installation Wizard starts the SQL Server Installation Center. To prepare the node for clustering, move to the Advanced page and then click Advanced cluster preparation.

The System Configuration Checker runs a discovery operation on your computer. To continue, click OK. You can view the details on the screen by clicking Show Details, or as an HTML report by clicking View detailed report.

On the Setup Support Files page, click Install to install the Setup support files.

The System Configuration Checker verifies the system state of your computer before Setup continues. After the check is complete, click Next to continue. You can view the details on the screen by clicking Show Details, or as an HTML report by clicking View detailed report.

On the Language Selection page, you can specify the language. To continue, click Next.

On the Product key page, enter your product key (PID), and then click Next.

On the License Terms page, accept the license terms, and then click Next to continue.

On the Feature Selection page, select the components for your installation, as described for the simple installation earlier.

The Ready to Install page displays a tree view of installation options that were specified during Setup. To continue, click Install. Setup will first install the required prerequisites for the selected features followed by the feature installation.

To complete the SQL Server installation process, click Close.

If you are instructed to restart the computer, do so now.

Repeat the previous steps to prepare the other nodes for the failover cluster. You can also use the autogenerated configuration file to run Prepare on the other nodes. Setup writes the file to a timestamped log folder, for example C:\Program Files\Microsoft SQL Server\110\Setup BootStrap\Log\20130603_014118\configurationfile.ini.
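Because Setup writes each run into a new timestamped folder, the file to reuse is the ConfigurationFile.ini in the newest folder. The sketch below finds it and builds the command for the next node. It assumes the SQL Server 2012 log root shown above (the `110` folder) and folder names in the `YYYYMMDD_HHMMSS` form, which sort chronologically as text. Passwords are not saved in the configuration file, so supply them on the command line as in command-line example 7 later in this article.

```python
"""Find the newest ConfigurationFile.ini written by SQL Server Setup (sketch)."""
import re
from pathlib import Path
from typing import Optional

LOG_ROOT = Path(r"C:\Program Files\Microsoft SQL Server\110\Setup Bootstrap\Log")
RUN_FOLDER = re.compile(r"^\d{8}_\d{6}$")  # e.g. 20130603_014118


def newest_configuration_file(log_root: Path = LOG_ROOT) -> Optional[Path]:
    runs = sorted(p for p in log_root.iterdir() if p.is_dir() and RUN_FOLDER.match(p.name))
    # Walk from the newest run backwards; not every run writes a configuration file.
    for run in reversed(runs):
        candidate = run / "ConfigurationFile.ini"
        if candidate.is_file():
            return candidate
    return None


def prepare_command(config_file: Path) -> str:
    return f'Setup.exe /ConfigurationFile="{config_file}"'


if __name__ == "__main__":
    if LOG_ROOT.is_dir():
        found = newest_configuration_file()
        print(prepare_command(found) if found else "no ConfigurationFile.ini found")
```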

Step 2: Install SQL Server

After preparing all the nodes as described in the prepare step, run Setup on one of the prepared nodes, preferably the one that owns the shared disk. On the Advanced page of the SQL Server Installation Center, click Advanced cluster completion.

The System Configuration Checker runs a discovery operation on your computer. To continue, click OK. You can view the details on the screen by clicking Show Details, or as an HTML report by clicking View detailed report.

On the Setup Support Files page, click Install to install the Setup support files.

The System Configuration Checker verifies the system state of your computer before Setup continues. After the check is complete, click Next to continue. You can view the details on the screen by clicking Show Details, or as an HTML report by clicking View detailed report.

On the Language Selection page, you can specify the language. To continue, click Next.

Use the Cluster Node Configuration page to select the instance name prepared for clustering.

Use the Cluster Resource Group page to specify the cluster resource group name where SQL Server virtual server resources will be located. On the Cluster Disk Selection page, select the shared cluster disk resource for your SQL Server failover cluster. Click Next to continue.

On the Cluster Network Configuration page, specify the network resources for your failover cluster instance. Click Next to continue.

Now follow the simple installation steps to configure the Database Engine, Reporting Services, Analysis Services, and Integration Services.

The Ready to Install page displays a tree view of installation options that were specified during Setup. To continue, click Install. Setup will first install the required prerequisites for the selected features followed by the feature installation.

Once installation is completed, click Close.

Follow this procedure to remove a node from an existing SQL Server failover cluster:

Insert the SQL Server installation media. From the root folder, double-click Setup.exe. To run Setup from a network share, navigate to the root folder on the share, and then double-click Setup.exe.

The Installation Wizard launches the SQL Server Installation Center. To remove a node from an existing failover cluster instance, click Maintenance in the left-hand pane, and then select Remove node from a SQL Server failover cluster.

The System Configuration Checker will run a discovery operation on your computer. To continue, click OK.

After you click Install on the Setup Support Files page, the System Configuration Checker verifies the system state of your computer before Setup continues. After the check is complete, click Next to continue.

On the Cluster Node Configuration page, use the drop-down box to specify the name of the SQL Server failover cluster instance to be modified during this Setup operation. The node to be removed is listed in the Name of this node field.

The Ready to Remove Node page displays a tree view of options that were specified during Setup. To continue, click Remove.

During the remove operation, the Remove Node Progress page provides status.

The Complete page provides a link to the summary log file for the remove node operation and other important notes. To complete the SQL Server remove node, click Close.

Using Command Line Installation of SQL Server

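1. To install a new, stand-alone instance with the SQL Server Database Engine, Replication, and Full-Text Search components, run the following command:

Setup.exe /q /ACTION=Install /FEATURES=SQL /INSTANCENAME=MSSQLSERVER /SQLSVCACCOUNT="<DomainName\UserName>" /SQLSVCPASSWORD="<StrongPassword>" /SQLSYSADMINACCOUNTS="<DomainName\UserName>" /AGTSVCACCOUNT="NT AUTHORITY\Network Service" /IACCEPTSQLSERVERLICENSETERMS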

2. To prepare a new, stand-alone instance with the SQL Server Database Engine, Replication, and Full-Text Search components, and Reporting Services, run the following command:

Setup.exe /q /ACTION=PrepareImage /FEATURES=SQL,RS /INSTANCEID=<MYINST> /IACCEPTSQLSERVERLICENSETERMS

3. To complete a prepared, stand-alone instance that includes the SQL Server Database Engine, Replication, and Full-Text Search components, run the following command:

Setup.exe /q /ACTION=CompleteImage /INSTANCENAME=MYNEWINST /INSTANCEID=<MYINST> /SQLSVCACCOUNT="<DomainName\UserName>" /SQLSVCPASSWORD="<StrongPassword>" /SQLSYSADMINACCOUNTS="<DomainName\UserName>" /AGTSVCACCOUNT="NT AUTHORITY\Network Service" /IACCEPTSQLSERVERLICENSETERMS

4. To upgrade an existing instance or failover cluster node from SQL Server 2005, SQL Server 2008, or SQL Server 2008 R2, run the following command:

Setup.exe /q /ACTION=upgrade /INSTANCEID=<INSTANCEID> /INSTANCENAME=MSSQLSERVER /RSUPGRADEDATABASEACCOUNT="<Provide a SQL DB Account>" /IACCEPTSQLSERVERLICENSETERMS

5. To upgrade an existing instance of SQL Server 2012 to a different edition of SQL Server 2012, run the following command:

Setup.exe /q /ACTION=editionupgrade /INSTANCENAME=MSSQLSERVER /PID="<PID key for new edition>" /IACCEPTSQLSERVERLICENSETERMS

6. To install SQL Server using a configuration file, run the following command:

Setup.exe /ConfigurationFile=MyConfigurationFile.INI

7. To install SQL Server using a configuration file and provide the service account passwords on the command line, run the following command:

Setup.exe /SQLSVCPASSWORD="typepassword" /AGTSVCPASSWORD="typepassword" /ASSVCPASSWORD="typepassword" /ISSVCPASSWORD="typepassword" /RSSVCPASSWORD="typepassword" /ConfigurationFile=MyConfigurationFile.INI

8. To uninstall an existing instance of SQL Server, run the following command:

Setup.exe /Action=Uninstall /FEATURES=SQL,AS,RS,IS,Tools /INSTANCENAME=MSSQLSERVER
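Long Setup.exe command lines like these are easy to mistype, for example with a space before `=` or a stray quote. The sketch below assembles one from parameter names and values, quotes values that contain spaces or backslashes, and can produce a masked copy for logs so that passwords are not written out. The parameter names come from the examples above; the account and password values are placeholders.

```python
"""Assemble SQL Server Setup.exe command lines from parameters (sketch)."""
from typing import Optional


def setup_command(params: dict[str, Optional[str]], mask_secrets: bool = False) -> str:
    parts = ["Setup.exe"]
    for name, value in params.items():
        flag = "/" + name.upper()
        if value is None:  # switches such as /Q or /IACCEPTSQLSERVERLICENSETERMS
            parts.append(flag)
            continue
        if '"' in value:
            raise ValueError(f"{flag}: values containing double quotes are not handled")
        if mask_secrets and flag.endswith("PASSWORD"):
            value = "********"  # keep passwords out of logs and tickets
        needs_quotes = " " in value or "\\" in value
        parts.append(f'{flag}="{value}"' if needs_quotes else f"{flag}={value}")
    return " ".join(parts)


if __name__ == "__main__":
    example = {
        "q": None,
        "ACTION": "Install",
        "FEATURES": "SQL",
        "INSTANCENAME": "MSSQLSERVER",
        "SQLSVCACCOUNT": r"CONTOSO\sqlsvc",  # hypothetical domain account
        "SQLSVCPASSWORD": "<StrongPassword>",
        "SQLSYSADMINACCOUNTS": r"CONTOSO\sqladmins",
        "AGTSVCACCOUNT": r"NT AUTHORITY\Network Service",
        "IACCEPTSQLSERVERLICENSETERMS": None,
    }
    print(setup_command(example, mask_secrets=True))
```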



Source: https://araihan.wordpress.com/tag/print-server/

Posted by: dollarsedid1987.blogspot.com
